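This is part 8 of the "Linux Kernel Memory Management" series:

Part 1 gave a brief overview of the key concepts in kernel memory management.

Part 2 introduced the kernel's memory-management data structures.

Part 3 covered memory handling from the kernel's very first line of code up to the jump into C code.

Part 4 surveyed memory handling in the C initialization code.

Part 5, in two installments, introduced the Memblock and buddy system allocators.

Part 6 explained how the memory error detector KFence works.

Part 7 explained how malloc allocates memory for processes.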

mmap and munmap

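The main purpose of mmap() is to map a file (a regular file or a device file) into a process's address space, so that the application can access the file by reading and writing memory. The corresponding operation for removing a mapping is munmap().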

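The following example, taken from Wikipedia, maps a shared anonymous region and a shared mapping of /dev/zero, writes a string into each, and then forks a child process: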


The program's output is:

`PID 22475: anonymous string 1, zero-backed string 1`

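The program creates two anonymous shared mappings, one plain anonymous mapping and one backed by /dev/zero, through which the parent and child processes access the same memory.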

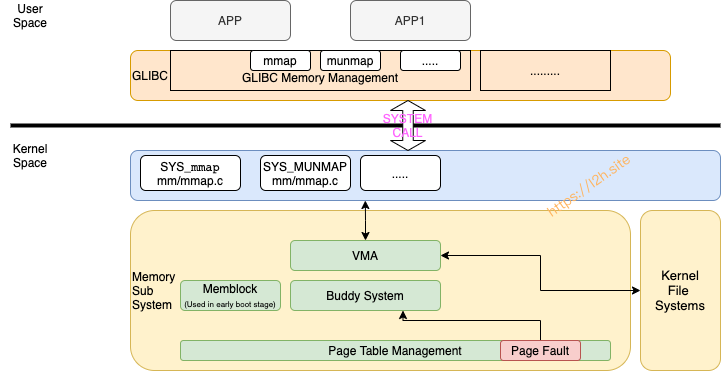

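The figure shows the architecture of mmap and munmap. It is similar to how malloc() works, as described in the previous article:

- Applications create and destroy mappings through the libc APIs mmap() and munmap().

- libc issues the corresponding system calls, such as SYS_mmap and SYS_munmap.

- For mmap(), the kernel creates a VMA mapping based on the given address, mapping length, and file information.

- For munmap(), the kernel removes the VMA mapping based on the given address information.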

The core kernel function that implements mmap is do_mmap():

`unsigned long do_mmap(struct file *file, unsigned long addr,`

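This function mainly performs a series of checks on its input parameters, sets up vm_flags according to those parameters, and finally passes them to mmap_region(), which creates the mapping.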

`unsigned long mmap_region(struct file *file, unsigned long addr,`

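The implementation of mmap_region() is also fairly simple, so it is not examined in detail here. Note that if the file argument is NULL, an anonymous mapping is created. If, in addition, the shared flag VM_SHARED is not set, the result is the same as allocating memory with malloc(): the kernel only sets up a mapping (a VMA) for the virtual address range, and physical pages are allocated later, on first access, by the page fault handler.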

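The kernel implementation of munmap() essentially just removes the corresponding VMA mappings, so this article does not analyze it further either.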

Reverse mapping

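Reverse mapping answers the following question: given a physical page, find the VMAs of all processes that map it. Why is such a lookup needed? The total virtual memory of all processes is usually far larger than physical memory, so to keep the system running, the kernel swaps temporarily unused physical pages out to disk and swaps them back in when they are needed again.

When a page is about to be swapped out, how does the kernel find out which processes are using it? Every page-table entry that maps the page has to be updated first, and locating those entries is exactly what reverse mapping is for.

A system has a very large number of pages, so even a tiny per-page reverse-mapping structure adds up to a large memory overhead. At the same time, reverse-mapping lookups happen frequently, so they must be efficient to avoid becoming a system bottleneck.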

Recall struct page: to save space, it is defined with many unions. The fields related to reverse mapping are stored in members such as mapping, _mapcount, and index.

`struct page {`

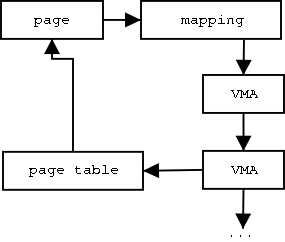

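The kernel documentation gives an intuitive illustration of this mapping, shown in the figure.

Put simply, struct page, the structure that describes a physical page, uses its mapping member to find every VMA that maps the page, and from those VMAs, all the virtual pages currently using that physical page.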

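In practice, finding the VMAs through mapping is not as simple as the figure suggests, because many cases have to be handled. The kernel therefore introduces struct anon_vma, together with struct anon_vma_chain, which links an anon_vma to each VMA associated with it (both are defined in include/linux/rmap.h).

In fact, the comment on the definition of this data structure explains why it is needed instead of having mapping point directly to a vm_area_struct: a vm_area_struct may be merged, split, and so on.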

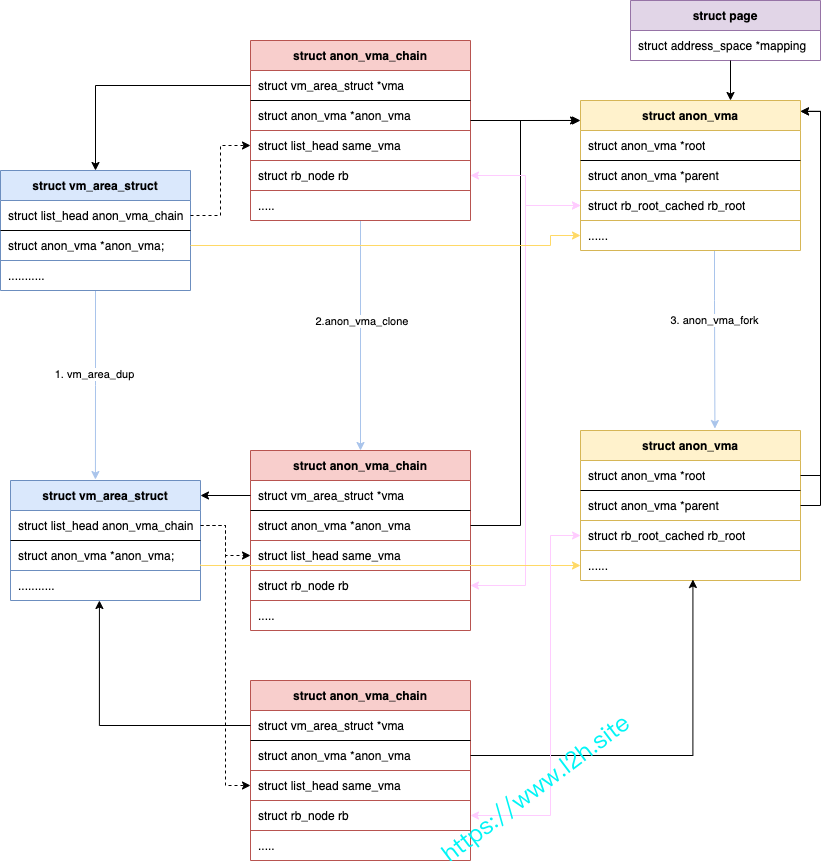

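The figure shows how the reverse-mapping fields change when a new process is forked:

- Forking a new process copies each VM area (vm_area_struct).

- For each VM area, every anon_vma_chain on its anon_vma_chain list is copied, linking the child's VM area to the parent's anon_vma.

- For each VM area, a new anon_vma and anon_vma_chain are also created for the child, and the new anon_vma is linked to the parent's anon_vma.

Once these links are in place, all related VM areas can be found starting from a physical page's struct page.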

Conclusion

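This article gave an overview of how mmap and reverse mapping work.

- mmap is mainly used by user-space processes to map a range of virtual addresses, either to share or allocate memory or to access files as if they were memory.

- Reverse mapping lets the kernel find all virtual addresses that map a given physical page, which the system needs when swapping pages.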

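Memory management is a vast subject that embodies the collective wisdom of kernel developers, and my understanding inevitably has gaps or inaccuracies. If you have questions or suggestions, please leave a comment so we can discuss them.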